I’m currently building a process and interface to crawl known-bad phishing pages, taking a screenshot of each and collecting other data. That data will be used to find similar-looking screenshots and similar-behaving network traffic in streaming logs of visited URLs. This is initially for a talk I’m giving at DeepSec in Vienna this fall, but I hope there’s enough time to make a live website that people can try out, instead of only uploading my code to GitHub.

So far, I’ve made a couple of things:

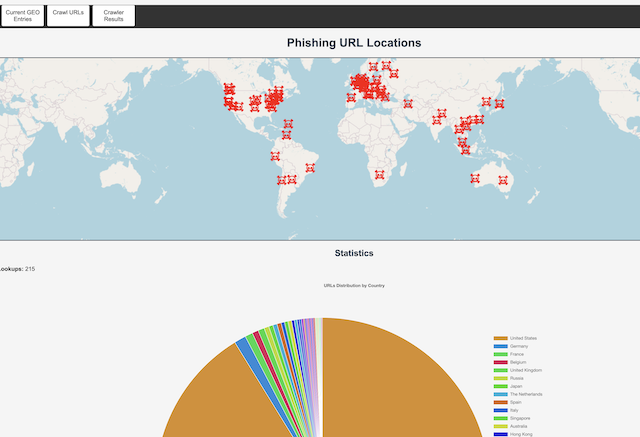

1: A web interface where you can upload a list of URLs and get the approximate physical location where those domains/subdomains are hosted. This helps me figure out where to put web crawlers.

2: Web crawlers. I run 20 at a time in docker-compose. They accept URLs and domains and will try to get a screenshot and other data. I’ll deploy more of them in the locations where I see the most phishing coming from.

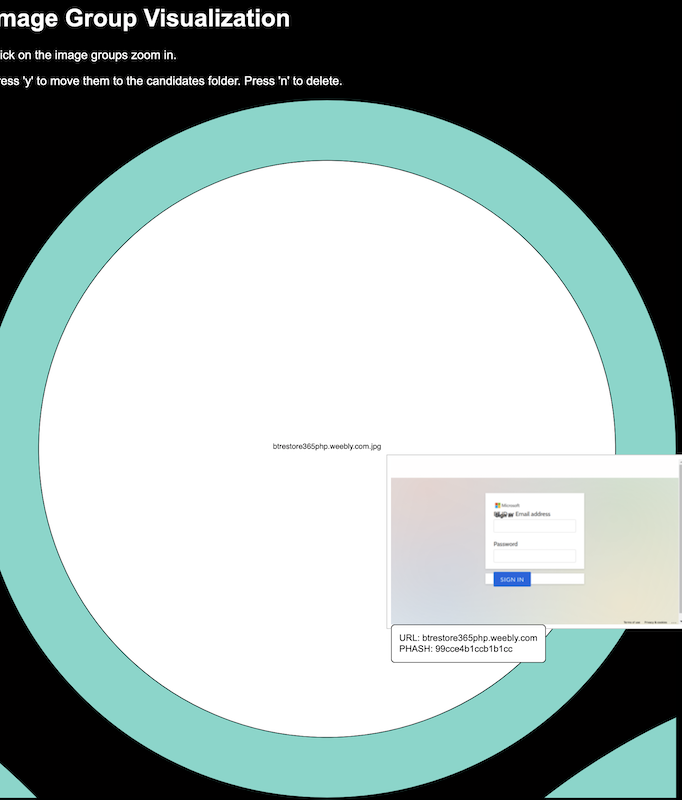

3: An interface to group similar screenshots together in order to easily delete those that don’t contribute to a malicious dataset and to easily keep those that can be added to a malicious dataset.
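
For anyone curious what grouping similar screenshots can look like in code, here is a minimal sketch. It is not necessarily how my interface does it: it assumes each screenshot has already been shrunk to a tiny grayscale thumbnail (a grid of 0-255 values), uses a simple average hash, and groups greedily by Hamming distance. The function names and the `max_distance` threshold are made up for illustration.

```python
from typing import Dict, List, Sequence, Tuple

# Assumption: a screenshot has already been downscaled to a small
# grayscale thumbnail, represented as rows of 0-255 integers.
Pixels = Sequence[Sequence[int]]


def average_hash(pixels: Pixels) -> int:
    """Return an integer whose bits mark pixels brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def group_similar(hashes: Dict[str, int], max_distance: int = 2) -> List[List[str]]:
    """Greedy grouping: each screenshot joins the first group whose
    representative hash is within max_distance, else starts a new group."""
    groups: List[Tuple[int, List[str]]] = []
    for name, value in hashes.items():
        for representative, members in groups:
            if hamming_distance(representative, value) <= max_distance:
                members.append(name)
                break
        else:
            groups.append((value, [name]))
    return [members for _, members in groups]
```

Greedy grouping depends on the order the screenshots arrive in, which is acceptable when a human reviews each group before keeping or deleting it.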

And I’m working on:

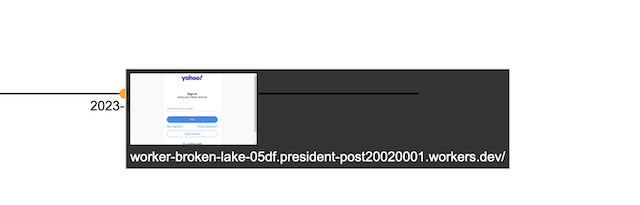

- A sort of ‘analyst interface’ that shows information from the malicious dataset and then any hits for similar websites, all on a timeline. This is a lot of JavaScript and I do not like JavaScript…
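
The data behind such a timeline could be shaped roughly like the sketch below, which merges known-bad entries and similarity hits into one time-ordered list and serializes it for a front end. The `Event` fields, the `kind` labels, and the JSON layout are all assumptions for illustration, not the actual format I use.

```python
import json
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Event:
    """One point on the timeline (hypothetical structure)."""
    timestamp: datetime
    kind: str  # assumed labels: "known_bad" or "hit"
    url: str


def build_timeline(known_bad: List[Event], hits: List[Event]) -> List[Event]:
    """Combine both sources and order them by time, oldest first."""
    return sorted([*known_bad, *hits], key=lambda event: event.timestamp)


def timeline_to_json(events: List[Event]) -> str:
    """Serialize events into a JSON array a timeline widget could read."""
    return json.dumps(
        [
            {"time": e.timestamp.isoformat(), "kind": e.kind, "url": e.url}
            for e in events
        ]
    )
```

Keeping the sorting and serialization on the server side means the JavaScript only has to draw what it is given.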